The Executive-Coder Experiment: Returning to Code with GenAI After 7 Years

“How hard is it to code a project from scratch?”—easier and more fun than expected!

But let’s start at the beginning.

Who am I?

I’m a CTO leading a 250-strong engineering organization at Enpal, a GreenTech unicorn in Germany.

I’m also an engineering executive who hasn’t coded for 7 years.

Actually, that’s a lie! I did spend two hours three years ago helping a friend with Google Sheets automation. Total time around code, even reading it? Less than 20 hours in five years.

At the same time, I am what you might call a tech native. I’ve been programming since I was 10. For 20 years, I’ve lived and breathed engineering, whether writing complex Scala code or designing geographically distributed systems that tangle intimately with the CAP theorem.

I lean on my technical background frequently in leadership work—whether setting tech strategy, sparring on architecture proposals, or defining post-mortem standards.

Enough about my background. Still curious? Check out my LinkedIn.

Why code?

As a CTO leading a sizable organization, my role centers on strategic initiatives and organizational leadership, leaving no time for coding myself. It is, however, my responsibility to understand engineering work, and coding is at the heart of it.

I’ve been following Generative AI developments closely for the last two years. I’ve also been using ChatGPT regularly, mostly for writing support and research.

Despite my background in machine learning, I’ve wrestled with understanding GenAI’s true value in software development. It’s useful, no doubt. But for what tasks? How much does it boost productivity? Where does it fall short?

Human vs AI /take 1

So, I ran an experiment. I wanted ChatGPT to generate Scala code similar to what I used in my conference demo on type classes in 2016. Here’s the prompt I used:

> Please provide a fully-functional Scala code for implementing type class that represents statistical library with 5-10 most common statistics methods that abstracts away the implementation of numbers

Quite a mouthful. I noticed that the generated code didn’t support custom numeric types, so I followed up:

> Please extend it so it can work with any number type, not only with Numeric from Scala, but any custom type that implements basic arithmetic, regardless of how the concrete operators and methods are called

And that was it! 123 lines of non-trivial Scala code. Reading through, I didn’t spot any issues with the implementation.

I chose this test because type classes are an advanced concept, and the Scala implementation relies on implicits, a mechanism specific to Scala.

I hadn’t expected ChatGPT to succeed with no guidance other than the high-level requirements in two prompts.

I knew then that I must understand GenAI capabilities to support software development!
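Here is the idea for readers who haven’t met the pattern. A type class declares a set of operations separately from the types that support them, and generic code is written against those operations. Scala supplies the matching instance through implicits. JavaScript has no equivalent, so the minimal sketch below passes the instance explicitly; it is not the code ChatGPT produced. The instance objects `numberOps` and `bigIntOps` and the function names are hypothetical, chosen only for illustration.

```javascript
// A "type class" as a plain object of operations (hypothetical instances).
// Generic statistics code only ever calls these operations.
const numberOps = {
  zero: 0,
  add: (a, b) => a + b,
  sub: (a, b) => a - b,
  mul: (a, b) => a * b,
  div: (a, b) => a / b,
  fromInt: (n) => n,
  compare: (a, b) => (a < b ? -1 : a > b ? 1 : 0),
};

// A different numeric type: BigInt values cannot be mixed with Number,
// so they need their own instance.
const bigIntOps = {
  zero: 0n,
  add: (a, b) => a + b,
  sub: (a, b) => a - b,
  mul: (a, b) => a * b,
  div: (a, b) => a / b, // BigInt division truncates toward zero
  fromInt: (n) => BigInt(n),
  compare: (a, b) => (a < b ? -1 : a > b ? 1 : 0),
};

function sum(ops, xs) {
  return xs.reduce((acc, x) => ops.add(acc, x), ops.zero);
}

function mean(ops, xs) {
  if (xs.length === 0) throw new RangeError("mean of an empty list");
  return ops.div(sum(ops, xs), ops.fromInt(xs.length));
}

// Population variance: the mean of squared deviations from the mean.
function variance(ops, xs) {
  const m = mean(ops, xs);
  const squaredDeviations = xs.map((x) => {
    const d = ops.sub(x, m);
    return ops.mul(d, d);
  });
  return mean(ops, squaredDeviations);
}

function max(ops, xs) {
  if (xs.length === 0) throw new RangeError("max of an empty list");
  return xs.reduce((a, b) => (ops.compare(a, b) >= 0 ? a : b));
}
```

Calling `mean(numberOps, [1, 2, 3, 4])` returns `2.5`. Calling `mean(bigIntOps, [1n, 2n, 3n, 4n])` returns `2n`, because BigInt division truncates. The statistics functions never change; only the instance does.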

Human and AI /take 2

I set out to run an experiment with practical results, so I chose to build a simple homepage.

I added constraints to make it interesting:

- A programming language I haven’t used before

- A cloud environment I’m not familiar with

- Production-grade quality standards

- Professional development workflow

Not knowing what to expect from GenAI while coding, I chose vanilla ChatGPT (GPT-4o).

It was Saturday morning, and I was ready to start.

Okay, cut! Cut! Results first! Some readers are executives, so outcomes first!

Uncovering the Outcomes

The experiment was a success! This executive was a productive developer with the help of GenAI. I finished the project in 28 hours while adhering to the self-imposed constraints.

The outcomes:

- A homepage with a custom domain is online: www.ivankusalic.com. This is where you are right now!

- Subscribing to the upcoming newsletter works.

- The code is thoroughly tested with unit tests and integration tests.

- The project is ready for real-world use, with features like logging, HTTPS enforcement, simple rate-limiting, input validation, and user input sanitization.

The development experience was smooth overall. Only about 5 of the 28 hours were frustrating, mostly spent debugging dependencies. The rest was genuinely enjoyable—I even found it hard to step away.

And to say that ChatGPT was “useful” is an understatement!

What I Learned Along the Way

Let’s dive into the insights from this experiment.

ChatGPT Capabilities

- ChatGPT proved tremendously useful in hands-on development. Though GenAI has limitations, it’s a powerful productivity boost. Without it, I’d have likely spent three times longer on the project—and with far more frustration.

- ChatGPT required direction for non-trivial development tasks. I had to stay clear on my goals, plan each next step, and track open issues to make the most of it.

- It needed guidance on what mattered. For instance, it didn’t suggest sanitizing user input, even when prompted for security improvements. While I was impressed by the testing scenarios it generated, it still missed a few critical ones.

- ChatGPT had a habit of hallucinating. It often forgot content it had just helped create, used placeholder references, or brought back outdated elements we’d already moved past. Amusingly, it kept generating tests that its own code couldn’t pass.

- However, hallucinations were less of an issue than I expected—they were easy to catch. Only once or twice did it take me 10 minutes to spot one.

- A bigger issue was its tendency to shift directions unpredictably. Especially during debugging, it kept suggesting new approaches—like switching libraries or changing dependency management.

- It frequently suggested suboptimal code changes to solve immediate issues—like adding unnecessary dependency injection in production code just to bypass a broken test setup.

- It tended to add complexity with little payoff. For example, when doing simple email validation, it kept trying to complicate the regex.

- ChatGPT missed intricate bugs. For instance, it couldn’t catch invalid pattern matching when extracting values from a POST request.

- At times, it missed even trivial bugs, like a DNS entry where the Host accidentally included the full domain.

Development Workflow

- An apt analogy for working with GenAI: it’s like driver/navigator pair programming, with the human in the navigator’s seat and the AI taking the wheel.

- My seven-year-old development workflow remained largely unaffected. I relied on familiar tools and habits: heavy local Git use for work-in-progress, driving implementation with positive tests, adding negative tests, then refactoring—all while navigating the codebase with Vim and terminal.

- I learned not to simply accept its suggestions when facing issues. Instead, I narrowed down the approach myself and gave clear instructions whenever a particular approach was a constraint it had to satisfy.

- Cutting down the noise benefited both the human and the AI, for example by focusing on one test or bug at a time.

- The main workflow change was frequent copy-pasting to and from the ChatGPT app. I rarely used the browser for research or debugging and visited StackOverflow only twice.

- It was a big help with visual design, an area I’m neither skilled in nor enjoy. It saved time and delivered better results than I could on my own.

- Another area where it shined was in writing tests. It was excellent at generating unit tests and fairly effective for integration tests. Hopefully, as an industry, we can finally agree that tests are not just vital—they’re now absolutely worth the effort!

- ChatGPT was helpful but unpredictable in debugging. I developed a pattern: I’d let it attempt debugging 2-3 times. If that failed, I’d shift to understanding the issue more deeply myself, either guiding it with more specifics or resolving the problem solo.

- It was least helpful with refactoring. Partly, this was due to the back-and-forth copying with the ChatGPT app—a gap that specialized tools may bridge. Still, complex refactoring needs a broader perspective, so I don’t expect a quick fix from tools alone.

Personal Insights

- Getting back into programming was surprisingly easy, even after 7 years. ChatGPT made the process less frustrating, though I’m confident I could have completed the project solo with similar results. It just would have taken longer.

- Compared to traditional coding, working with AI is just as engaging but less frustrating. The satisfying elements remain: problem-solving, software design, and driving engineering quality. But some of the annoyances—like boilerplate code, endless debugging searches, and sifting through low-quality articles—are reduced.

- Overall, I found AI less frustrating than expected. Hallucinations were a smaller issue than anticipated, and even the copy/pasting felt manageable—I was still faster with AI than I would have been without it.

- One pattern I noticed: my prompts grew more concise over time. While debugging, I often just pasted exceptions without any added context.

Questions for the Road Ahead

After concluding the experiment, I have a clearer understanding of what GenAI can and cannot do currently. However, I’m far from having all the answers. Here are some open questions:

- What is the productivity boost when programming regularly? It’s likely to be smaller, as routine code and recurring issues are often quicker to handle manually.

- How effective is GenAI with larger codebases, especially for newcomers? I haven’t explored this at all given the nature of the project.

- How do differences among languages, frameworks, and libraries manifest in the development workflow with GenAI? I’ve only explored using basic HTML, CSS, and JavaScript.

- How should organizations integrate GenAI to increase developers’ productivity?

- Even—what is the future of the software developer’s role?

The Bottom Line?

Overall, I’m pleased with the experiment—the project outcome exceeded my expectations, and I gained concrete insights into using GenAI in software development.

One thing is clear: as an engineering executive, I can’t leave GenAI adoption to chance—the impact is too profound. Moving forward, my role will include not only enabling engineers to use GenAI effectively but also setting standards and processes to maximize its benefits. I don’t know exactly how that will take shape yet, but some ideas are already forming.

Does this experience change how I see my role as CTO? It does not. With or without ChatGPT, I’m still responsible for 250 people, so I won’t be coding anything soon.

Would I want to return to programming over being an engineering executive? A resounding no—I thrive on the impact, strategy, problem-solving, and the people side of my role. If anything, using JavaScript as a backend language put Ivan the Developer to rest more effectively than management ever could.

(Read on to dive deeper into the journey throughout this experiment. Otherwise, thank you for reading!)

Update: I’m exploring how engineering organizations can effectively adopt AI for software development productivity in my follow-up article “Strategic AI Adoption: Engineering Organization Transformation”. Check it out!

From Concept to Code

Back to the beginning. This was meant to be a GenAI-powered experiment, and that’s how it started.

Choices first

I kicked it off with ChatGPT to decide on the tech stack—Vercel with Jekyll. The exploration phase spanned 11 interactions and covered setting requirements, evaluating hosting platforms, diving deeper into Vercel features, and considering future scalability.

As I’ve used ChatGPT for research in the past, no surprises here.

> Time used: 1h. Total time: 1h. ChatGPT usage: Main research tool.

Laying the Groundwork

It took about half an hour to set up a clean environment. Here’s a rough outline of the steps:

- Created a new SSH key and added it to GitHub

- Set up my old dotfiles

- Set up the newest version of Ruby via Homebrew

- Created a new Jekyll project

- Created a new repo and made the initial commit

So far so good. Very minimal support from ChatGPT—only one question during SSH key generation.

I chose a command-line development environment with Vim. It was a comfortable choice, since I use iTerm regularly and Vim is my go-to editor. Thinking about it, this setup might be a bit quirky given my role.

Having my old .gitconfig made me happy: it felt like I had last coded weeks, not years, ago. It felt comfy, familiar, professional.

> Time used: 30min. Total time: 1h30min. ChatGPT usage: Minimal research support.

And We’re Live!

Four commits later, I had a clean Jekyll setup for a one-page website, without that pesky footer.

Next, I focused on getting the homepage live quickly. Small iterations, incremental releases, and all that good stuff!

This was straightforward but took a while. I had to figure out how to override Jekyll defaults and understand the project structure, which meant a bit of digging into the gem structure of Jekyll’s Minima theme. Nothing too complex as I did a lot of Ruby years ago.

Once the page was served locally, I focused on deployment. This part was a breeze. I created a Vercel account and a new project linked to my GitHub repo. After that, it took only one git push for the static page to go live, with a glorious “TEST homepage” to show for my efforts!

Setting up a custom domain took 10 minutes, which was trivial since I already owned the domain. Now the “TEST homepage” was live on my domain!

I tested the page on both desktop and mobile. On mobile, an unsightly hamburger menu was tarnishing my pristine “TEST homepage”, so I removed it. I also updated the message to “The page is currently under construction. Please come back soon.” and called it a day.

That afternoon, I turned to ChatGPT a few times for trivial questions rather than Googling. Local Git, which I was still getting back into, already proved valuable when I went down the wrong path with the setup.

> Time used: 4h30min. Total time: 6h. ChatGPT usage: Light research support.

One-page Site

A new day, and still the weekend! Time to create a static page I’m not embarrassed to show to people.

This was me and ChatGPT all the way.

Copywriting took about an hour, followed by a couple more hours of tweaking to get the design I liked. This was my first time using ChatGPT for HTML and SASS styling. Initially, it dumped everything into a single file, index.markdown, so I guided it to extract parts of HTML to fit Jekyll’s structure, using the Minima theme as a blueprint. I did the same with styling, going from inline CSS to modular SASS that Jekyll supports.

This is something I would have struggled with on my own: I never delved deeply into CSS, nor do I have an eye for design. But working with ChatGPT made it straightforward.

A particularly noteworthy moment came when I wasn’t sure how to structure links and a photo into a header section. I’d seen a clean, minimalist one-pager a friend used, so I simply asked ChatGPT:

> I want to have a profile picture as circle on the left side of header information. Similar as placement on: {link-to-friends-page}. Please provide content/code for jekyll's index.markdown

Wow, the generated code was exactly what I wanted.

In this session, I was fully pairing with ChatGPT. It was delivering what I asked for, and I was tweaking it further. The only tedious part was the copy-pasting to and from the ChatGPT app.

Adding a favicon took longer than expected: a full 45 minutes. Generating it was easy, but getting it to display properly was frustrating. The culprit? Caching. I was reminded of the old saying: “There are only two hard things in Computer Science: cache invalidation and naming things.” I found it both ironic and reassuring that my first unexpectedly time-consuming task was a caching issue. Some things haven’t changed in the last seven years, after all.

It was late, but I had a live website I’d feel good about sharing.

> Time used: 3h. Total time: 9h. ChatGPT usage: Full-on pairing.

Towards Newsletter

The work week had started, so my first few free hours didn’t come until late Tuesday evening.

I knew the next topic was to prepare for a newsletter, as I plan to create one in the near future. The first step would be to let people show interest by sharing their email address.

This was a fun session. First, I researched, with ChatGPT, what to use for storing subscriber data.

I chose Vercel’s KV store as it was directly supported by Vercel and was a proper store to integrate with.

I needed to choose a programming language for serverless functions and settled on JavaScript. Initially, I considered Ruby, but I know Ruby well, whereas I’ve only dabbled in JS a few times. Plus, JS felt like a better challenge—as a developer, I’ve always preferred strong type systems, and JS is the exact opposite: a weakly typed, dynamic language, infamous for silent error handling. I even resisted the urge to use TypeScript. After all, I was looking for a challenge.

After choosing JS, I did a quick, 10-minute ChatGPT-guided introduction to the necessary tools to set up a local environment. Installing NodeJS was straightforward, and I was off to a solid start.

Even writing the integration test for the KV store was easier than expected. The only hiccups were related to npm and dependencies. Oh well, another thing that didn’t change with time.

ChatGPT was invaluable for setup, tooling, and debugging both code and dependencies.

I went to bed quite late, but with a sense of accomplishment. After all, I was successfully writing, reading, and deleting from the KV store in my toy integration test.

> Time used: 4h. Total time: 13h. ChatGPT usage: Continuous usage for research and pairing.

Mounting Frustration

Next, I carved out time on Thursday evening.

This time, my goal was to add subscription functionality and connect it to the KV store.

I started by asking ChatGPT what information to store about a newsletter subscriber. It suggested a lot I didn’t need, but also covered everything I did. I settled on the following data format:

key: subscriber:email
data: {
  email: email,
  first_name: firstName,
  subscription_date: subscriptionDate,
  referral_source: "ivankusalic.com"
}

Next, I worked on the function that stores a subscriber in the KV store:

async function addSubscriber(email, firstName)

With ChatGPT’s help, I first created the function. The code was straightforward, but I appreciated that it handled details like using the YYYY-MM-DD format for the subscription date.

Next, I asked it to generate tests, and it provided two positive and one negative integration test:

it('should successfully add a subscriber')

it('should overwrite an existing subscriber when adding with the same email')

it('should handle trying to add a subscriber with invalid email')

I repeated the process for the getSubscriber and deleteSubscriber functions, then confirmed directly in the KV store that each function made the expected changes.
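A minimal sketch of these three functions might look like the following, using the data format above. It assumes a Redis-style client with async `get`, `set`, and `del` methods, standing in for the Vercel KV client; the article doesn’t show that client’s exact calls. `createInMemoryKv` and `createSubscriberStore` are hypothetical helpers that let the sketch run without a live store. The optional `now` parameter is an addition for testability.

```javascript
// Hypothetical in-memory stand-in for a Redis-style KV client (async get/set/del),
// so this sketch runs without Vercel credentials.
function createInMemoryKv() {
  const data = new Map();
  return {
    async get(key) { return data.has(key) ? data.get(key) : null; },
    async set(key, value) { data.set(key, value); return "OK"; },
    async del(key) { return data.delete(key) ? 1 : 0; },
  };
}

// Hypothetical factory that builds the subscriber functions around an injected client.
function createSubscriberStore(kv) {
  const keyFor = (email) => `subscriber:${email}`;

  async function addSubscriber(email, firstName, now = new Date()) {
    // Placeholder guard only; proper format validation lives in a separate helper.
    if (typeof email !== "string" || !email.includes("@")) {
      throw new Error(`Invalid email: ${email}`);
    }
    const record = {
      email,
      first_name: firstName,
      // YYYY-MM-DD; toISOString() uses UTC, so around midnight the date can
      // differ from the local calendar date.
      subscription_date: now.toISOString().slice(0, 10),
      referral_source: "ivankusalic.com",
    };
    // set() overwrites, so re-adding the same email replaces the stored record.
    await kv.set(keyFor(email), record);
    return record;
  }

  async function getSubscriber(email) {
    return kv.get(keyFor(email)); // null if no such subscriber
  }

  async function deleteSubscriber(email) {
    return (await kv.del(keyFor(email))) === 1; // true if a record was removed
  }

  return { addSubscriber, getSubscriber, deleteSubscriber };
}
```

Injecting the client keeps the functions unit-testable. The project’s own tests, by contrast, ran as integration tests against the real KV store.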

Next, I created a simple helper function for basic email format validation, using regex. This was all ChatGPT; I only rejected using any third-party libraries.

I also requested detailed unit tests, and ChatGPT generated 19 of them. Here, ChatGPT hallucinated a bit—it created reasonable examples of ill-formatted emails and negative tests, but a few were beyond what the simple regex could handle. I opted to keep the regex straightforward and dropped three overly ambitious tests.
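A deliberately simple check in that spirit could look like this sketch; `isValidEmail` and the exact pattern are assumptions, not the project’s code. It requires exactly one `@`, no whitespace, and a dot in the domain part. It makes no attempt at full RFC 5322 compliance, so some technically invalid addresses, such as ones with consecutive dots in the local part, still pass. That is the trade-off a simple regex accepts.

```javascript
// Hypothetical helper: basic shape check only. One "@", no whitespace,
// and at least one dot in the domain part. Intentionally loose.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function isValidEmail(email) {
  return typeof email === "string" && EMAIL_PATTERN.test(email);
}
```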

Finally, I spent most of my time creating a serverless function to expose the Subscribe API. In hindsight, this turned out to be the most challenging part of the entire experiment.

The first implementation ChatGPT provided was basic, so we iterated to improve it. Once again, I opted not to add external dependencies. The result was still robust, including input validation, simple rate-limiting, HTTPS enforcement, and logging.
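The article doesn’t show how the rate limiting was done. Without external dependencies, one common minimal approach is a fixed-window counter kept in memory, as in this sketch; `createRateLimiter`, the limit, and the window length are hypothetical. One caveat applies. Serverless instances don’t share memory and can be recycled at any time, so this only dampens bursts hitting a warm instance. It does not enforce a global limit.

```javascript
// Hypothetical fixed-window rate limiter: at most `limit` requests per client
// per window. State lives in this instance's memory and is never pruned here.
function createRateLimiter({ limit = 5, windowMs = 60_000 } = {}) {
  const hits = new Map(); // clientId -> { windowStart, count }

  return function isAllowed(clientId, now = Date.now()) {
    const entry = hits.get(clientId);
    if (!entry || now - entry.windowStart >= windowMs) {
      hits.set(clientId, { windowStart: now, count: 1 }); // start a new window
      return true;
    }
    entry.count += 1;
    return entry.count <= limit;
  };
}
```

In a handler, the client key would typically be derived from the client IP, and a rejected request would get an HTTP 429 (Too Many Requests) response.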

That wasn’t the hard part, though. Getting the tests to run was a true exercise in frustration. I learned that JS has two module systems, require (CommonJS) and import/export (ES modules), and that they don’t mix easily. I kept running into exceptions and ended up pasting them directly into ChatGPT. It struggled to resolve the issues, and we went through various testing libraries in search of a fix.

Eventually, I gave up on mocking altogether and switched to pure integration tests for my new API, writing directly to the KV store. I managed to get these tests working, though I couldn’t replicate internal server errors in an integration test.

Overall, it was a frustrating experience. It highlighted some limitations of ChatGPT: it kept switching between libraries and flipping between dependency management approaches. It also needed more guidance, forcing me to reason through the code and issues in greater detail.

This might sound negative, but considering I hadn’t used JS seriously before, it was manageable. While frustrating at times, it would have taken me much longer without ChatGPT.

> Time used: 4h. Total time: 17h. ChatGPT usage: Debugging and code generation, frustrating.

Subscriptions Up and Running

The work week was over, and the Friday evening session was more fun.

I added a newsletter section to the page, quickly styled the form asking for first name and email, and added client-side JavaScript to send the data to the Subscribe API I created before.

Since I’d already used ChatGPT for styling, everything went smoothly. Even calling the API worked almost on the first try. There was just one bug—a wrong API path for the serverless function, another ChatGPT hallucination which took five minutes to fix.
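The client-side call can be sketched as a small function that posts the form data as JSON. The `/api/subscribe` path, the JSON field names, and the error handling are assumptions. A wrong path was exactly the bug here, so the path is worth checking against where the function is actually deployed. `fetch` is injected so the sketch can run outside a browser.

```javascript
// Hypothetical client helper for the newsletter form.
// SUBSCRIBE_PATH is an assumption about where the serverless function lives.
const SUBSCRIBE_PATH = "/api/subscribe";

async function subscribe(email, firstName, fetchFn = globalThis.fetch) {
  const response = await fetchFn(SUBSCRIBE_PATH, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ email, firstName }),
  });
  if (!response.ok) {
    // Including the status makes a wrong path (HTTP 404) easy to spot.
    throw new Error(`Subscription failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

On Vercel, a function in `api/subscribe.js` is typically exposed at `/api/subscribe`, so the client path should mirror the file location.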

After that, I made a few smaller refactorings and called it a day.

> Time used: 3h. Total time: 20h. ChatGPT usage: Smooth full-on pairing.

Staying Updated: New Subscriber Alerts

On Saturday morning, I began with some light refactoring of code and tests.

I also wondered if I was finished. After all, the subscription feature was up and running.

I decided to set up an email notification to a dedicated address whenever someone subscribes. This not only keeps me informed of new subscribers but also creates functionality I’ll need when the time comes to send out the newsletter.

Equally relevant, I wanted to experiment with the project a bit more. I also had a nagging awareness that I hadn’t implemented proper unit tests with mocks for my Subscribe API. However, it was such a beautiful morning that I didn’t want to dive into JS dependency hell just yet.

After some quick research with ChatGPT, I settled on SendGrid as the service for sending out emails.

The SendGrid setup proved surprisingly frustrating. I wanted to send emails from my custom domain, but SendGrid wouldn’t recognize the DNS entries. Since this process can take a while, I wasn’t sure if something was wrong or if I just needed to wait. It turned out the DNS entries were incorrect.

Ironically, SendGrid had provided them, and I had simply copy-pasted their CNAME data without modification. The issue was simple: SendGrid provided Host entries that included the full domain name instead of just the prefixes. My domain registrar, Namecheap, didn’t catch it, nor did ChatGPT. And since I hadn’t dealt with DNS setup in a while, I missed it too. A quick Google search helped. There might be a lesson here: one of the simplest bugs so far was the one that slipped through the cracks of three systems and one human.
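The mismatch is easy to see on paper. Many registrars, Namecheap among them, treat the Host field as relative to your domain and append the domain themselves, so pasting a fully qualified name yields a doubled one. The sketch below turns a fully qualified name into the prefix such a form expects. `toRelativeHost` is hypothetical, and so are the record names in the usage note; the real SendGrid values aren’t shown in the article.

```javascript
// Hypothetical helper: converts a fully qualified DNS name into the relative
// "Host" value that registrars which append the domain themselves expect.
function toRelativeHost(fqdn, domain) {
  const name = fqdn.replace(/\.$/, "").toLowerCase(); // drop a trailing root dot
  const zone = domain.replace(/\.$/, "").toLowerCase();
  if (name === zone) return "@"; // record at the apex of the domain
  if (!name.endsWith(`.${zone}`)) {
    throw new Error(`${fqdn} is not inside ${domain}`);
  }
  return name.slice(0, -(zone.length + 1)); // strip ".<domain>"
}
```

For example, `toRelativeHost("em1234.ivankusalic.com", "ivankusalic.com")` returns `"em1234"`. Pasting the full name into such a Host field would otherwise create `em1234.ivankusalic.com.ivankusalic.com`.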

In parallel, I began implementing the functionality for sending out emails. The concept was straightforward: I would send an email whenever a new subscriber clicked the submit button. This meant the Subscribe API would need to call a new API—the Send Email API.

I decided it was acceptable for this notification to fail; what mattered was that the subscriber data was stored in the KV store. So, I set about creating a function to be called from the serverless function behind the Subscribe API:

function emailMeAboutNewSubscriber(email, firstName)

Not the best name, but naming things is the second hard thing in computer science!
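The rule that storing must succeed while the notification may fail comes down to an ordering. Persist the subscriber first, then attempt the email, and only log a failure. In this sketch the storage and email functions are injected to keep it self-contained. `handleSubscription` and `saveSubscriber` are hypothetical names, and in the real project the email went out through SendGrid.

```javascript
// Hypothetical handler core: stores the subscriber, then tries to notify.
// A storage failure propagates; a notification failure is only logged.
async function handleSubscription(
  { email, firstName },
  { saveSubscriber, emailMeAboutNewSubscriber, log = console }
) {
  await saveSubscriber(email, firstName); // essential: errors bubble up to the caller

  try {
    await emailMeAboutNewSubscriber(email, firstName);
  } catch (err) {
    // Best effort: the subscriber is already stored, so don't fail the request.
    log.error(`New-subscriber notification failed: ${err.message}`);
  }
  return { status: 200, body: { message: "Subscribed" } };
}
```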

By now, the process felt familiar—ChatGPT and I were iterating on tests and implementation in parallel.

The most interesting development was that I successfully implemented unit tests for the new functionality with proper mocks. It took effort, but this time I didn’t waver. ChatGPT remained stoic as always.

Emboldened by this success, I turned my attention to the issue that had defeated me on Thursday: transforming those Subscribe API integration tests into proper unit tests. It was bloody work. ChatGPT was confused. I was confused. We were mocking functions, then modules, and then even more modules. In the end, it all came together, and I learned more about JS module loading mechanisms than I ever wanted to know.
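Module-level mocking depends on the test framework and on whether the code uses `require` or `import`. That is exactly where things got tangled, so it isn’t reproduced here. The core idea of a mock is simpler: a stand-in function that records its calls so a test can assert on them. Frameworks ship their own versions, for instance Jest’s `jest.fn()`. `createMock` and `notifyNewSubscriber` below are hypothetical, hand-rolled illustrations.

```javascript
// Hypothetical minimal mock: wraps an optional implementation and records
// the arguments of every call in `mockFn.calls`.
function createMock(impl = () => undefined) {
  const calls = [];
  const mockFn = (...args) => {
    calls.push(args);
    return impl(...args);
  };
  mockFn.calls = calls;
  return mockFn;
}

// Hypothetical unit under test: builds the notification and hands it to sendEmail.
async function notifyNewSubscriber(sendEmail, email, firstName) {
  return sendEmail({
    subject: "New newsletter subscriber",
    text: `${firstName} <${email}> just subscribed.`,
  });
}
```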

After a bit more work, the emails started arriving in my inbox as expected.

> Time used: 6h. Total time: 26h. ChatGPT usage: Winning together against the mocks.

Finishing Touches

It was time for the final tweaks—adding analytics support and sanitizing user input.
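The article doesn’t detail the sanitization. The sketch below shows one common minimal approach for a free-text field like the first name, assuming it later ends up in HTML such as the notification email. It drops control characters, trims the value and caps its length, then escapes HTML-special characters. `sanitizeFirstName`, `escapeHtml`, and the 50-character cap are hypothetical.

```javascript
// Characters that could break out of HTML text or attribute values.
const HTML_ESCAPES = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

// Hypothetical helper: replaces each HTML-special character with its entity.
function escapeHtml(text) {
  return text.replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

// Hypothetical helper: strings only, control characters removed, trimmed,
// capped in length, then HTML-escaped.
function sanitizeFirstName(input, maxLength = 50) {
  if (typeof input !== "string") return "";
  const cleaned = input
    .replace(/[\u0000-\u001f\u007f]/g, "") // strip control characters
    .trim()
    .slice(0, maxLength);
  return escapeHtml(cleaned);
}
```

Escaping at output time is often preferred. Escaping on input, as here, is the simpler option when the value is only ever rendered as HTML.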

After one last deployment, the experiment came to a close.

> Time used: 2h. Total time: 28h. ChatGPT usage: Pairing for the win.

Story in Numbers

Project timeline:

- 8 days, spanning two weekends and 3 workday evenings

- 28 hours total, including 3 hours of copywriting

The stack:

- ChatGPT - GenAI support for research and coding

- Vercel - cloud environment serving the webpage

- Vercel services - KV store, serverless functions, analytics

- Namecheap - domain name registrar

- SendGrid - email delivery service

- Git/GitHub - version control

- Jekyll - static page generator

- JavaScript - backend programming language

- NodeJS - local JavaScript runtime

- Vim - editor/IDE

- iTerm - command line interface

The codebase:

- Frontend: 7 custom HTML files, 3 custom SASS files, 1 custom JS function

- Backend: 2 serverless JavaScript APIs and 7 supporting functions

- Tests: 51 unit tests and 9 integration tests

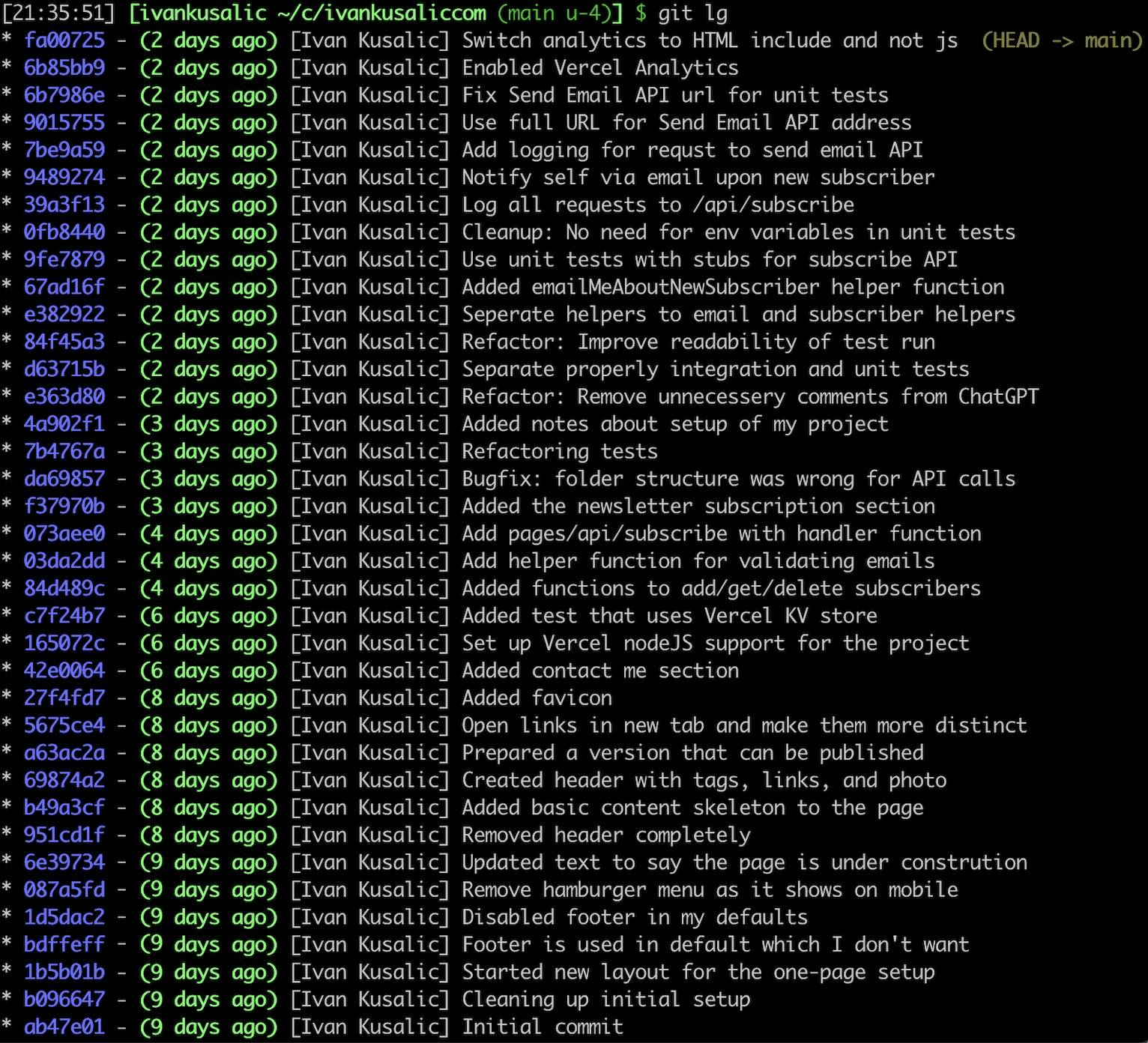

Here is the git log of my commits during the experiment:

That’s it! Thank you for reading!
